How to build trust in AI

IBM plays a major role, working with government agencies, in the overall effort towards zero tolerance for AI that can't be trusted

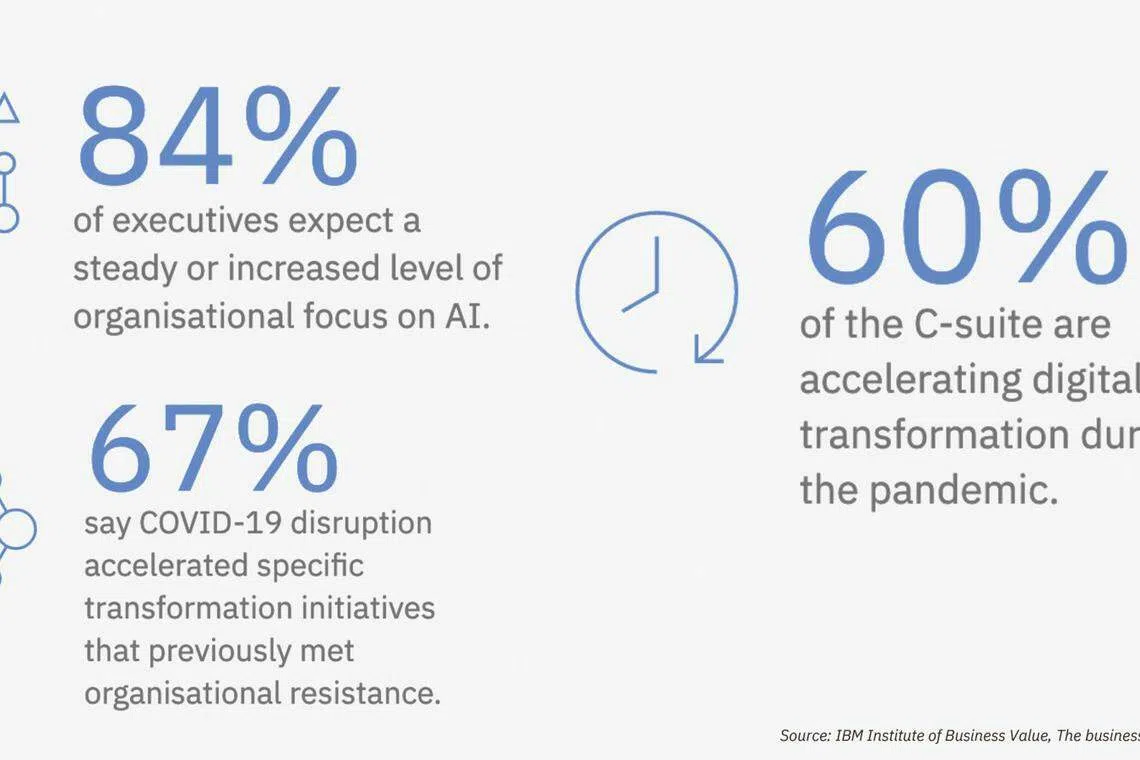

As the world grapples with a global public health crisis and its economic and social fallout, we need the combined power of humans and machines more than ever. Machine intelligence goes by two names that have both become buzzwords: artificial intelligence (AI) and machine learning (ML), the branch of AI in which systems learn from data. Businesses are investing heavily in AI and ML to make them deliver on their hyped-up potential.

No wonder, then, that businesses and governments are set to spend US$98 billion on AI-related solutions and services in 2023, roughly 2.6 times the US$37.5 billion they will spend this year, according to International Data Corp (IDC) estimates.

Simply put, AI is about getting computers to perform tasks or processes that would be considered intelligent if done by humans. Which sectors are leading the charge? Retail and banking, each of which is set to invest more than US$5 billion in AI this year. Nearly half of retail spending will go to automated customer service agents, expert shopping advisers and product recommendation systems. The banking industry, meanwhile, is focusing on automated threat intelligence and prevention systems, as well as on fraud analysis and investigation.

But cracks are beginning to appear. A recent survey from Deloitte says that 56 per cent of organisations are slowing their AI adoption because of emerging risks related to AI governance, trust and ethics.

Most companies are ill-prepared, lacking the tools and skills needed to ensure that AI output is fair, safe and reliable. There are also questions about access to relevant, clean and unbiased data. Despite this gap between risk and preparedness, many companies are not actively addressing these concerns with the right risk management practices.

Removing Barriers

To realise AI's potential, we need to remove the barriers to its adoption. For that, AI needs to be trusted and ethical. Trust allows organisations to manage AI decisions in their business while maintaining full confidence in the protection of their data and insights.

The tech sector cannot tackle the ethics of AI alone. Stakeholders must agree on guard rails that keep AI processes transparent and explainable, so that we can trust what the technology offers. Unless users can trust AI, we will have a tougher time using it to solve the world's problems. We are already seeing how AI can help organisations tackle the unforeseen demands of the pandemic.

For example, Delfi makes and sells chocolate confectionery products in Singapore and 13 other countries. It wanted an automated, integrated and self-service reporting solution across all those countries. IBM built a cloud-based data lake, business intelligence (BI) and analytics solution for Delfi, giving it a comprehensive view of its data for decision-making. "It was a great partnership with IBM to start our big data analytics journey," Thomas Tay, Delfi's Group IT Manager, said. "We need an experienced BI team to help translate Delfi's requirement into a useful visualisation dashboard for the management."

Electrolux teamed up with IBM in the Asia-Pacific, Middle East and Africa regions to provide real-time weather data that helps consumers make energy-saving choices about when to use appliances such as clothes dryers, air purifiers and air-conditioners. "Having cutting-edge weather insights means that consumers will enjoy recommendations about their connected appliances tailored specifically and uniquely to where they live," said Gaurav Julka, Electrolux's Senior IT Manager for APAC & MEA. "We help environment-conscious consumers to save energy and make better sustainable decisions."

Singapore's Framework

Singapore has taken an early lead in addressing these issues. In January 2020, it released the second edition of its Model AI Governance Framework. The framework addresses transparency and fairness, and takes a human-centric approach that encompasses well-being and safety.

On Oct 16, Singapore launched the AI Ethics & Governance Body of Knowledge (AI E&G BoK), a collaboration between the Singapore Computer Society (SCS) and the Infocomm Media Development Authority (IMDA). The BoK is a "living document" that will be updated periodically.

The BoK is a reference document that forms the basis of future training and certification for professionals in both ICT and non-ICT domains. Singapore is probably one of the first countries in the world to have developed a BoK focused on the ethics of AI. "The BoK is tailored for practical issues related to human safety, fairness and the prevailing approaches to privacy, data governance and general ethical values," according to its authors. "Simply put, this BoK aims to be a kind of directory handbook for three key stakeholders - AI solution providers, businesses and end-user organisations, and individuals or consumers. The need arises because of the rapid advances in AI tools and technologies, and the increasing deployment and embedding of such tools in apps or solutions."

Open Toolkits

IBM is working with government agencies around the world to assess regulatory frameworks. We have also studied the frameworks that influence ethical considerations in tech development and deployment. For example, IBM has partnered with the University of Notre Dame to establish a Tech Ethics Lab, which helps scholars and industry leaders explore and evaluate ethical frameworks and ideas.

One significant finding from this research is the role of the open-source community in fostering trusted AI workflows. Open source is an excellent enabler of trust because the code and techniques are available for everyone to access. IBM has donated toolkits, including AI Fairness 360 and the Adversarial Robustness Toolbox, to the Linux Foundation AI project so that the broader community can co-create tools under the governance of the Linux Foundation.

Beyond customer care, AI has enormous potential to help companies reduce the risks of returning to the workplace. Watson Works, a curated set of products that embeds AI into applications, is helping companies manage facilities and optimise space allocation, prioritise employee health, and communicate with employees and other stakeholders.

AI has great potential. However, business leaders need to be mindful of issues of trust, transparency and ethics surrounding the technology. Another challenge is finding sufficiently trained talent to handle issues beyond the tech. Jobs are at the heart of every economic recovery, and upskilling is central to creating them. The rapid increase in demand for AI skills will require companies - and even countries - to ramp up skills-upgrading schemes.

Stepping Up Training

In September, SkillsFuture Singapore (SSG) and IBM partnered to train 800 mid-career professionals in AI and cybersecurity under the IBM-SGUnited Mid-Career Pathways Programme. The skills-focused, blended-learning programme will comprise lectures, lab sessions and industry-based case studies.

In November, IBM signed a memorandum of intent (MOI) with the Infocomm Media Development Authority (IMDA) to hire and train 300 Singaporeans in emerging tech areas over the next four years. Participants will receive training for roles such as data scientists and cloud architects under the TechSkills Accelerator (TeSA) Company-Led Training programme and the TeSA Mid-Career Advance programme. Of the 300 positions on offer, 60 are for mid-career professionals, while the remaining 240 are open to both new and mid-career professionals. The partnership is part of ongoing efforts between the government and industry partners to create jobs and training opportunities for Singaporeans.

"Currently, Singapore has adopted an advisory approach to the deployment of AI," writes Dr Yaacob Ibrahim in the preface to the AI BoK. "Together with this BoK, there is enough scope for AI deployment to be unhindered and yet to be mindful of possible ethical issues. Also, it is suitable for specific sectors to issue guidelines which can help members of the sector deploy AI in an ethical and effective way and within existing rules and regulations specific to that sector. For example, the Monetary Authority of Singapore (MAS) has released its FEAT (Fairness, Ethics, Accountability, Transparency) principles to guide the use of AI and data analytics in the financial sector."
