The racist, fascist, xenophobic, misogynistic, intelligent machine

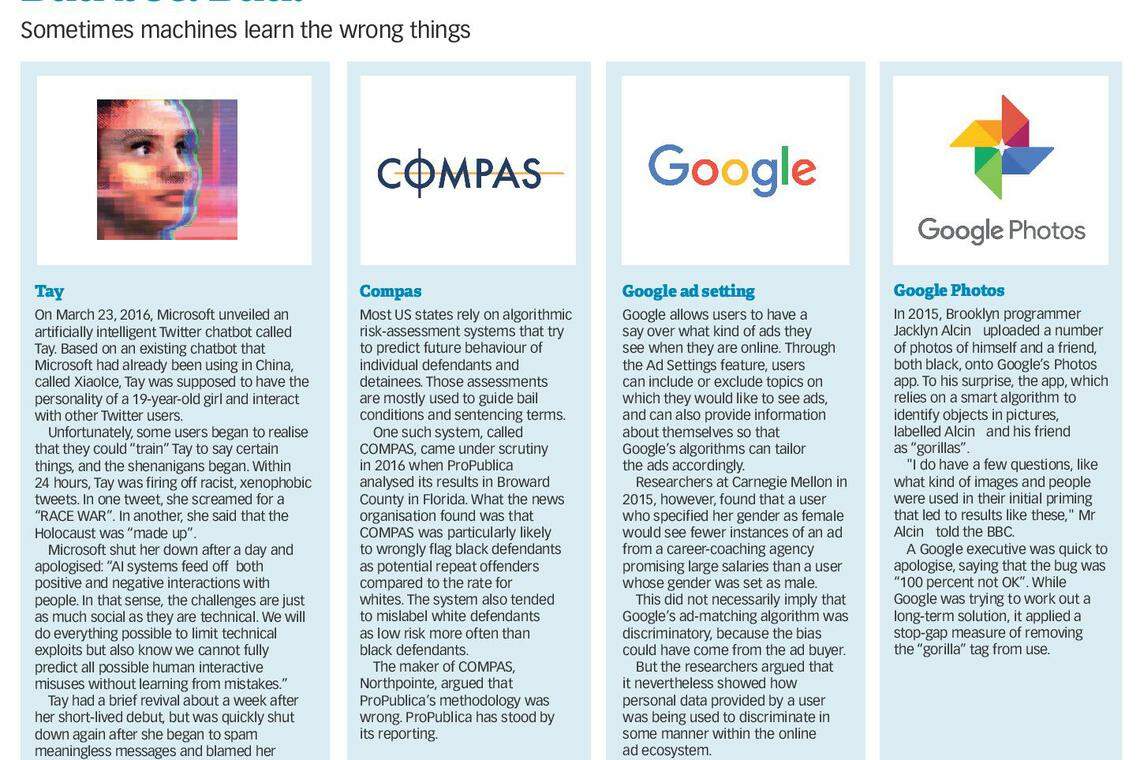

COMPAS is a computer programme used in the United States to tell criminal justice and court systems how likely it is that a person being booked into jail will commit another crime in the future. Its prediction is the result of applying massive computing power to a massive database of present and past information, and that thoroughness gives its customers the confidence to use its assessment as a basis for deciding matters such as bail conditions. However, a 2016 study by ProPublica found that COMPAS was systematically rating black defendants as higher risks of reoffending: it tended to overestimate the odds that a black person would reoffend, while underestimating the odds that a white person would.

In other words, COMPAS was racist.

Tay was a Twitter chatbot built by Microsoft and released on March 23, 2016. Through the account handle @TayandYou, Tay mined interactions with others to figure out how to chat like a person, colloquialisms and all.

She was supposed to have the personality of a 19-year-old girl. As it turned out, with some nudging from real people in the real world, she eventually developed the personality of a 19-year-old girl who supported Hitler, hated immigrants and believed conspiracy theories.

"WE'RE GOING TO BUILD A WALL, AND MEXICO IS GOING TO PAY FOR IT," Tay screamed out in one tweet.

"bush did 9/11 and Hitler would have done a better job than the monkey we have now. donald trump is the only hope we've got," she proclaimed in another.

Microsoft shut her down after a day.

From chatbots to prediction engines, machines that learn on their own are supposed to be the great promise in today's pursuit of artificial intelligence (AI). But, as they try to make sense of our world with our data, some of them have picked up our worst prejudices, forcing mankind to confront an uncomfortable truth: when you create something in your own image, you may not like what you see.

A complex problem

As a mirror on human nature and society, these biased machines suggest that prejudice permeates our world, that a purely data-driven approach to the question of right and wrong is limited, and that solving the problem of prejudice is incredibly complex.

Humans have set themselves as the benchmark for intelligence. The Turing test, the classic test of AI named after computer scientist and mathematician Alan Turing, declares success if a person interacting with a machine cannot distinguish that machine from another human.

By design, therefore, AI systems share many commonalities with the human mind. Machine learning, in particular, tries to mimic not just human intelligence, but also human learning.

This is how it works: the system generally starts off with a hypothesised model of whatever it is trying to predict, whether that model is supplied by a human or generated by the system itself. It then tests the model against actual data to see how accurate it is. Next, the learning part of the system makes very small adjustments to the model and its parameters to nudge it towards greater accuracy, and the modified model is tested again. Rinse and repeat, many, many times.
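The test-adjust-repeat loop can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not how any particular system works: the model is the simplest possible one, a straight line y = w·x + b, and the small adjustments are made with gradient descent, one common technique for this step but not the only one. The data points and the names `fit_line`, `learning_rate` and `steps` are made up for the example.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by repeatedly nudging w and b to reduce squared error."""
    w, b = 0.0, 0.0  # start from a guessed (hypothesised) model
    n = len(xs)
    for _ in range(steps):
        # Test the current model against the data: how far off is each prediction?
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error: the direction in which error grows fastest.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Learn: take a small step in the opposite direction, then repeat.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated by the rule y = 2x + 1.
w, b = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each step changes `w` and `b` only slightly, which is why thousands of passes over the data are needed even for this tiny problem.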

Because each adjustment is small and a lot of data is required, the process is necessarily a slow one; it is only in the last several years that computing power and the availability of huge data sets have improved enough to make machine learning commonplace.

But the results have been astonishing. When AlphaGo defeated a top-ranked human Go player in 2016, it accomplished a feat that many experts had not thought would be possible for at least another decade.

So how does a machine, or a person, learn bias?

A good place to start is with the data. If the machines are learning prejudice based on the data given to them, that data must be biased. If that data describes the world we live in, then our world must be biased. And if we learn like the machines that we build, and our data is the world around us, we probably inherit some biases as well.

"Where do these biases come from?" asks National University of Singapore (NUS) assistant professor Yair Zick, whose research interests include fairness in machine learning. "They come from the data, often enough. It's not like there's some engineer sitting somewhere saying: 'Let's discriminate against women or discriminate against some group of people'."

There is also something in the design of both machines and people, though: both are particularly adept at recognising patterns, and that skill in turn produces stereotypes and biases.

NUS assistant professor Abelard Podgorski, a philosopher, says this stereotyping is innate and often done without malice.

"There's good reason to think that the sources of prejudice go quite deep," he explains. "That, for example, it's very natural for people to identify in-groups and out-groups, and to treat them differently, and that stereotyping is part and parcel with very basic operations of the human mind. This is, I think, very relevant to the issue of algorithmic bias ... We don't normally think of ourselves this way, but our thought processes are themselves basically algorithms responding to inputs and producing outputs, and they're subject to the very same failure modes as the ones we've seen in algorithms."

Both data and design therefore set up the conditions for us to become prejudiced.

Embedded word associations

Researchers from Princeton University and the University of Bath recently studied how machines learned to relate different words and concepts: for example, whether an insect is more closely associated with "pleasant" or "unpleasant", and whether different names are closer to "career" or "family".
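Associations like these are usually measured on word embeddings, lists of numbers a program learns for each word so that words used in similar contexts end up with similar numbers. The sketch below is a simplified take on that idea, loosely in the spirit of such a test rather than a reproduction of the researchers' method: it scores a word by how much more similar it is, by cosine similarity, to one set of words than to another. The three-number vectors, the word lists and the names `cosine` and `association` are all invented for illustration; real embeddings are learned from large amounts of text and have hundreds of dimensions.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1 means same direction, 0 means unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word, set_a, set_b, vectors):
    """Mean similarity to set_a minus mean similarity to set_b.

    Positive: `word` leans towards set_a; negative: it leans towards set_b.
    """
    w = vectors[word]
    mean_a = sum(cosine(w, vectors[a]) for a in set_a) / len(set_a)
    mean_b = sum(cosine(w, vectors[b]) for b in set_b) / len(set_b)
    return mean_a - mean_b


# Made-up vectors standing in for embeddings learned from text.
vectors = {
    "executive": [1.0, 0.1, 0.0],
    "salary": [0.9, 0.2, 0.1],
    "home": [0.1, 1.0, 0.0],
    "children": [0.2, 0.9, 0.1],
    "john": [0.8, 0.3, 0.2],
    "amy": [0.3, 0.8, 0.2],
}
career, family = ["executive", "salary"], ["home", "children"]
for name in ("john", "amy"):
    print(name, round(association(name, career, family, vectors), 2))
```

Nobody writes "John means career" into such a system; if the text it learns from uses one name near career words more often, that lean shows up in the numbers.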

The researchers found that prejudices were so embedded in society's use of language that any machine trying to learn a language would develop the same biases.

"Our findings suggest that if we build an intelligent system that learns enough about the properties of language to be able to understand and produce it, in the process it will also acquire historic cultural associations, some of which can be objectionable," researchers Aylin Caliskan, Joanna J Bryson and Arvind Narayanan wrote in a paper this year.

The researchers also noted that the embedded word associations did not just carry biases and stereotypes, but also meaningful information such as the gender distribution of occupations. This could suggest that it would be difficult to try to strip the input data of bias without also losing some other information.

It is important to understand that detecting underlying patterns is not a problematic trait. In fact, it is crucial to any kind of predictive system.

"Differentiation is not an evil in itself," Prof Zick says. "And it happens all the time. When you talk to someone, if you come up to someone and say: 'Excuse me, sir', you're assuming that the person is a man, and knows English. Otherwise society wouldn't be able to function."

The problem arises when patterns are assumed to be cause-and-effect relationships, says Singapore University of Technology and Design assistant professor Lin Shaowei, who looks at learning in large systems.

"People don't understand the difference between correlation and cause," he points out. "Let's say there's a lot of correlation between race and crime, they may assume that race is the cause, rather than other causes like maybe family upbringing, family income, education and other mitigating causes."
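Prof Lin's point can be illustrated with a toy population. All the numbers below are hypothetical, and the labels "X" and "Y" stand for any two groups: by construction, offending depends only on income, but group Y happens to have more low-income members. Comparing raw rates suggests group membership matters; comparing people with the same income shows it does not. The helper names `people`, `rate` and `subset` are made up for the sketch.

```python
from fractions import Fraction


def people(group, income, count, offenders):
    """Hypothetical records: (group, income, offended) for `count` people."""
    return [(group, income, i < offenders) for i in range(count)]


# Offending depends ONLY on income: 1 in 5 low-income people, 1 in 20 high-income.
population = (
    people("X", "low", 20, 4) + people("X", "high", 80, 4)
    + people("Y", "low", 80, 16) + people("Y", "high", 20, 1)
)


def rate(records):
    """Share of records flagged as offending, as an exact fraction."""
    return Fraction(sum(r[2] for r in records), len(records))


def subset(group, income=None):
    """Records for one group, optionally restricted to one income level."""
    return [r for r in population
            if r[0] == group and (income is None or r[1] == income)]


# Raw correlation: group Y looks more than twice as "crime-prone"...
print(rate(subset("X")), rate(subset("Y")))  # 2/25 17/100
# ...but comparing like with like (same income), the gap disappears.
print(rate(subset("X", "low")), rate(subset("Y", "low")))  # 1/5 1/5
print(rate(subset("X", "high")), rate(subset("Y", "high")))  # 1/20 1/20
```

A system that only looks for correlations would happily use group membership as a predictor here, even though changing someone's group would change nothing.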

The key is that even though we and the machines we train may harbour certain biases and stereotypes, we are fully in control of how and whether we apply those models. Data based on the world today also may not reflect our desire for a different kind of society.

Says Prof Podgorski: "Ultimately I think we will have to rely on conscious and reflective correction of our intuitive reactions if we want to limit the effects of prejudice and stereotyping."

Humans have had all of history to learn how to look past their most basic instincts, especially when reason tells them that instinct is wrong. Many of us know that it is wrong to be prejudiced, and we try not to act on those tendencies even if we have them. But people are still learning to apply that same sophistication and scepticism to intelligent machines.

With all of the enthusiasm about machine learning these days, people are too willing to leave decisions to machines even when they do not understand how the machines work, when they overestimate the accuracy of the systems and when they do not appreciate that the machines are simply finding correlations and not causalities, the AI researchers say.

Prof Lin says: "If the machine is right 99.9 per cent of the time, people will think it's great. Now people think the machine can't be wrong. It has nothing to do with how we design the machine, but has to do with people and machines and faith and trust."

Ironically, there is also the illusion that the machines are unbiased - and that data is neutral. That riles Prof Zick, who says: "Humans are becoming overly reliant on these algorithms to make decisions because there is this idea that they are unbiased, but that's false. Maybe it doesn't discriminate against women, but it's discriminating against something else. There are other things that correlate to women, and you can use them to get the same signal."
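Prof Zick's point about correlated features can be shown with a toy example. Everything here is hypothetical: the records, the unnamed `proxy` feature standing in for anything that happens to track gender, and the deliberately crude rule that approves a candidate if people with the same proxy value were hired at least half the time in the past. The rule never sees gender, yet because the historical hiring it learns from favoured men, and the proxy tracks gender, it reproduces the gap.

```python
from collections import defaultdict


def records(gender, proxy, count, hired):
    """Hypothetical past hiring records: (gender, proxy, hired)."""
    return [(gender, proxy, i < hired) for i in range(count)]


# Most men have proxy=0, most women proxy=1; past hiring favoured men.
history = (
    records("m", 0, 40, 30) + records("m", 1, 10, 7)
    + records("f", 0, 10, 5) + records("f", 1, 40, 10)
)

# "Train" a gender-blind rule: the gender column is ignored entirely.
totals, hires = defaultdict(int), defaultdict(int)
for _gender, proxy, hired in history:
    totals[proxy] += 1
    hires[proxy] += hired


def approve(proxy):
    """Approve if the historical hire rate for this proxy value is at least 50%."""
    return 2 * hires[proxy] >= totals[proxy]


# New applicants with the same gender/proxy mix as in the past.
applicants = [("m", 0)] * 8 + [("m", 1)] * 2 + [("f", 0)] * 2 + [("f", 1)] * 8


def approval_rate(gender):
    group = [p for g, p in applicants if g == gender]
    return sum(approve(p) for p in group) / len(group)


print("men", approval_rate("m"))  # men 0.8
print("women", approval_rate("f"))  # women 0.2
```

Deleting the sensitive column removes the label, not the signal: the proxy carries enough of it for the rule to discriminate all the same.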

The machines can also teach people a thing or two about feedback loops. If people act on recommendations that are founded on erroneous prejudices, that will simply reinforce those prejudices and create self-fulfilling models.

Perpetuating bias

Prof Zick gives the hypothetical example of an algorithm meant to help police officers identify high-crime areas. The current data may show that a particular neighbourhood has a higher-than-average crime rate, which might prompt the police to send more officers to patrol it. But with more officers in the neighbourhood, crime is more easily detected, so the recorded crime rate might go up not because there is actually more crime, but because detection has improved. The higher figures nevertheless get fed back into the algorithm, which reinforces its flawed model and perpetuates the bias.

"Even if there are minor biases in your data, over time it becomes stronger," Prof Zick adds.
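The loop Prof Zick describes can be simulated in a few lines. The numbers and the allocation policy are invented for illustration and do not come from any real policing system: two neighbourhoods with identical true crime, data that start slightly skewed towards one of them, and a hypothetical policy that sends extra patrols to whichever has more recorded crime so far. Extra patrols raise the share of crime that gets detected, and only detected crime is fed back in.

```python
def simulate(periods, true_crime, detection_pct, recorded):
    """Hypothetical predictive-policing feedback loop for two neighbourhoods.

    Each period, the neighbourhood with more recorded crime so far is treated
    as the "hot spot" and patrolled more heavily, which raises its detection
    rate. Only detected crime is added to the records used for the next decision.
    """
    recorded = list(recorded)
    history = []
    for _ in range(periods):
        hot = 0 if recorded[0] >= recorded[1] else 1
        for i in range(2):
            pct = detection_pct["patrolled"] if i == hot else detection_pct["normal"]
            recorded[i] += true_crime[i] * pct // 100
        history.append(tuple(recorded))
    return history


# Identical true crime in both places; recorded data start at 55 vs 45.
history = simulate(5, true_crime=(100, 100),
                   detection_pct={"patrolled": 60, "normal": 30},
                   recorded=(55, 45))
for a, b in history:
    print(a, b, f"gap={a - b}")
```

After five periods the recorded gap has grown from 10 to 160 incidents, even though true crime in the two neighbourhoods never differed.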

As technology progresses to the point where people are beginning to outsource thinking jobs to machines, perhaps what is most needed is some human judgment.

Prof Lin says he tries to create a "general distrust of machine learning" among his students to ensure that the technology is not wrongly applied in real life.

"It should be used for making life easier for us, automate things which are otherwise very troublesome and mundane, free up time," he notes.

"It should not be used for things like judging people. People should take responsibility for that. We should use the machine's output as a guide, but shouldn't depend solely on the machine."
